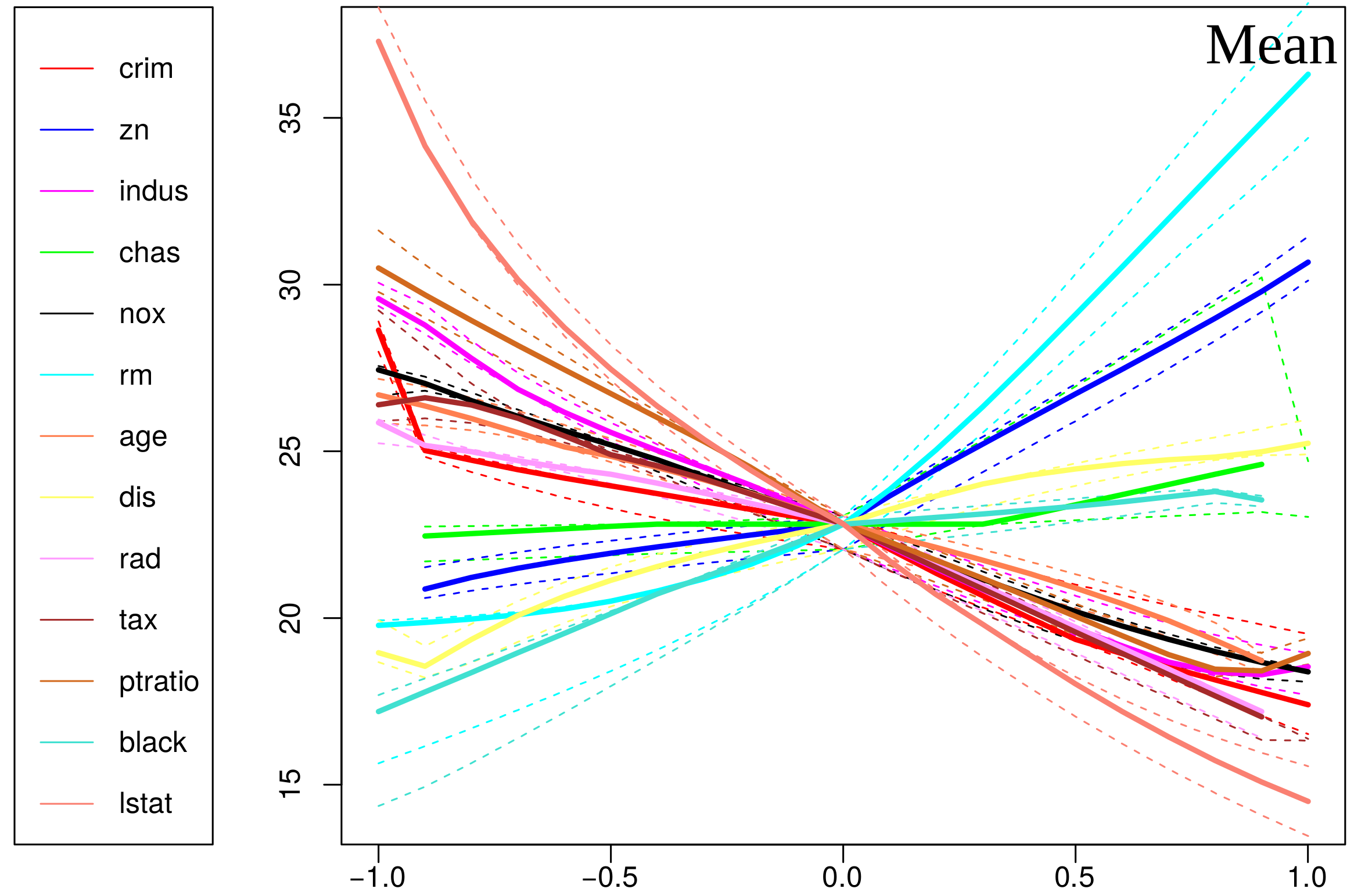

Interpretability, Accountability and Robustness in Machine Learning

CNRS - Institut de Mathématiques de Toulouse (2017 - .)
3IA-ANITI project (2019 - .)

Context:

Machine learning strategies rely on the fact that a decision rule can be learned from a set of labeled observations, called the training (or learning) sample. The learned decision rule is then applied to the whole population, which is assumed to follow the same underlying distribution as the training sample. This principle is illustrated below:

Central idea in supervised machine learning: a black-box decision-rule model is trained so that it can later predict scores. More specifically, the training data consist of inputs and corresponding outputs. The black-box model is trained to transform each observed input so that it fits the corresponding output as well as possible. Once the model is learned, predictions can be made on new input data. (illustration from here)

Learning samples may however be biased, either because of a real but unwanted bias in the observations (societal bias, a sample that is not representative of the whole population, ...) or because of data pre-processing. The goal of my research on these questions is twofold:

The first goal is to detect, analyze and remove such biases. This is called fair learning and is strongly related to the accountability of artificial intelligence algorithms.

The second goal is to understand how biases are created and to provide more robust, certifiable and explainable methods to handle distributional effects in machine learning.

This work therefore has key applications to societal issues raised by artificial intelligence. It can also be applied directly in industrial settings where the interpretability, reproducibility and robustness of machine learning algorithms are of high interest. It started in 2017 in the context of an AOC team-project reading group on fair learning, initiated by J.-M. Loubes.
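As a small illustration of the bias-detection goal stated above, the sketch below computes the ratio of positive-prediction rates between two groups, a quantity often called disparate impact. The choice of this particular measure, the function name `disparate_impact` and the toy data are assumptions made for illustration; the text above does not say which criterion is used in this work.

```python
def disparate_impact(predictions, groups, protected=0):
    """Ratio of positive-prediction rates: protected group over all others.

    predictions: iterable of 0/1 decisions made by a (black-box) model.
    groups:      iterable of group labels, one per prediction.
    protected:   label of the group whose treatment is being checked.
    """
    prot = [p for p, g in zip(predictions, groups) if g == protected]
    rest = [p for p, g in zip(predictions, groups) if g != protected]
    if not prot or not rest:
        raise ValueError("both groups must be non-empty")
    rate_prot = sum(prot) / len(prot)
    rate_rest = sum(rest) / len(rest)
    if rate_rest == 0:
        raise ValueError("the reference group has no positive prediction")
    return rate_prot / rate_rest


# Hypothetical toy data: 8 binary decisions, 4 per group.
y_pred = [1, 0, 0, 0, 1, 1, 1, 0]
group = [0, 0, 0, 0, 1, 1, 1, 1]

di = disparate_impact(y_pred, group, protected=0)
print(f"disparate impact: {di:.3f}")
```

A value close to 1 means both groups receive positive decisions at similar rates, while a value well below 1 suggests that the protected group is disadvantaged by the decision rule.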
Current projects:

Explainability in Artificial Intelligence

A first paper describing the strategy developed at the Mathematics Institute of Toulouse was written in collaboration with F. Bachoc (Mcf. Univ. Toulouse), F. Gamboa (Pr. Univ. Toulouse) and J.-M. Loubes (Pr. Univ. Toulouse). It performs global explainability by stressing the input variables of a black-box model, which makes it possible to efficiently analyze the specific effect of each variable on the decision rule. A Python toolbox is coming soon.

Result of [BGLR19] obtained on the Boston Housing dataset. Each curve highlights the impact of one input variable of a trained black-box prediction model (here Random Forests) when predicting the price of a house in Boston.

Making machine learning algorithms fair

I develop new computationally efficient algorithms that promote fairness through an Optimal Transport cost penalty. This work, carried out in collaboration with J.-M. Loubes (Pr. Univ. Toulouse) and N. Couellan (Pr. ENAC), is in progress.

Funding:

2019: CNRS innovation prize obtained for the project Ethik-IA, in order to develop a reference Python package dealing with Interpretability, Fairness and Robustness in Machine Learning. Obtained with J.-M. Loubes (IMT, Univ. Toulouse).

2018: Maturation program with Toulouse Tech Transfert, in order to work on practical strategies to detect selection biases in Machine Learning. Obtained with J.-M. Loubes (IMT, Univ. Toulouse) and P. Besse (IMT, INSA Toulouse).

Links:

Popular Science

Tutorials

Packages and codes
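Pending the Python toolbox mentioned under Explainability in Artificial Intelligence, the idea of stressing a variable of a black-box model can be sketched as follows. This is a minimal sketch under stated assumptions, not the published method: the observations are reweighted by exponential tilting (the reweighting closest to uniform weights in Kullback-Leibler divergence under a mean constraint) so that the weighted mean of one variable reaches a chosen target, and the weighted mean prediction is then compared with the baseline. The names `tilted_weights` and `black_box`, and the toy data, are hypothetical.

```python
import math


def tilted_weights(x, target_mean, tol=1e-10, max_iter=200):
    """Weights w_i proportional to exp(lam * x_i), with lam chosen by bisection
    so that the weighted mean of x equals target_mean."""
    if not (min(x) < target_mean < max(x)):
        raise ValueError("target_mean must lie strictly inside the range of x")

    def weights(lam):
        shift = max(lam * xi for xi in x)  # subtract the max for stability
        e = [math.exp(lam * xi - shift) for xi in x]
        total = sum(e)
        return [ei / total for ei in e]

    def weighted_mean(lam):
        return sum(w * xi for w, xi in zip(weights(lam), x))

    # The weighted mean increases with lam, so bracket the root first.
    lo, hi = -1.0, 1.0
    while weighted_mean(lo) > target_mean:
        lo *= 2.0
    while weighted_mean(hi) < target_mean:
        hi *= 2.0
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if weighted_mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
        if hi - lo < tol:
            break
    return weights(0.5 * (lo + hi))


def black_box(x1, x2):
    """Hypothetical stand-in for a trained model (for example a random forest)."""
    return 3.0 * x1 + 0.5 * x2


# Hypothetical toy sample with two input variables.
x1 = [0.0, 1.0, 2.0, 3.0, 4.0]
x2 = [4.0, 1.0, 3.0, 0.0, 2.0]
preds = [black_box(a, b) for a, b in zip(x1, x2)]

baseline = sum(preds) / len(preds)

# Stress x1: move its mean from 2.0 to 3.0 and see how the predictions react.
w = tilted_weights(x1, target_mean=3.0)
stressed_x1_mean = sum(wi * a for wi, a in zip(w, x1))
stressed = sum(wi * p for wi, p in zip(w, preds))

print(f"baseline mean prediction: {baseline:.3f}")
print(f"stressed mean prediction: {stressed:.3f}")
```

Repeating the last step over a grid of target means, for each variable in turn, gives one response curve per variable. The model is never retrained or re-evaluated here: only the weights on existing predictions change, which keeps such an analysis cheap.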